Understanding Selection Bias: The Data Scientist’s Silent Pitfall

In the world of data science, the quality of your output is fundamentally capped by the quality of your input. We often hear the phrase “Garbage In, Garbage Out,” but the most dangerous form of “garbage” isn’t messy formatting or missing values: it’s selection bias.

Selection bias occurs when the data used to train a model or conduct an analysis is not representative of the real-world population it aims to describe. When your sample is “tilted,” your conclusions will be too, leading to models that fail spectacularly when deployed in the wild.

The Mechanism of Bias

At its core, selection bias happens during the sampling phase. If certain individuals or data points are more likely to be included in a dataset than others, the resulting statistical inferences will carry systematic error rather than just random noise. Unlike random error, which shrinks as the sample size grows, selection bias is structural; more data will simply lead to a more “confident” incorrect conclusion.
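
The point about “confident” wrong answers can be illustrated with a small simulation. This is a minimal sketch under made-up assumptions: the population is standard normal (true mean 0), and a hypothetical selection step silently drops every value at or below -0.5. The function name and the cutoff are illustrative, not taken from any real dataset.

```python
import random
import statistics


def biased_sample_mean(n, seed=0):
    """Return (mean, standard error) of a sample collected with a selection step.

    Assumed setup: the population is standard normal (true mean 0),
    but only values above -0.5 ever make it into the dataset.
    """
    rng = random.Random(seed)
    sample = []
    while len(sample) < n:
        x = rng.gauss(0.0, 1.0)
        if x > -0.5:  # selection step: low values are never recorded
            sample.append(x)
    mean = statistics.fmean(sample)
    std_err = statistics.stdev(sample) / n ** 0.5
    return mean, std_err


# Larger samples shrink the standard error but leave the mean far from 0.
for n in (100, 10_000, 100_000):
    mean, std_err = biased_sample_mean(n)
    print(f"n={n:>7}  mean={mean:.3f}  std_err={std_err:.4f}")
```

As `n` grows, the standard error falls toward zero while the estimated mean stays well above the true value of 0. The estimate becomes more precise without becoming more accurate.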

Common Types of Selection Bias in Data Science

To build robust models, data scientists must recognize the various “flavors” this bias takes:

- Sampling Bias: This is the most straightforward form. If you are building a model to predict national consumer trends but only collect data from users in urban tech hubs, your model will fail to account for rural or less tech-savvy demographics.

- Self-Selection (Volunteer) Bias: This occurs when individuals choose whether to participate. For example, customer satisfaction surveys often suffer from this; people with extremely positive or extremely negative experiences are more likely to respond, leaving out the “silent majority” of average users.

- Survivorship Bias: This involves focusing on the “survivors” of a process and ignoring those that were filtered out. A classic example is analyzing the features of successful startups while ignoring the thousands that failed using the same strategies.

- Attrition Bias: Common in longitudinal studies or churn modeling, this happens when specific types of participants drop out over time. If the users who stop using an app are those who found it too difficult, analyzing only the remaining power users will give a distorted view of the app’s usability.
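
Survivorship bias in particular is easy to reproduce with a toy cohort. The sketch below uses invented counts: 1,000 hypothetical startups, a strategy adopted by 700 of them, and a 10% survival rate that is identical with or without the strategy. The `Startup` class and helper names are illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Startup:
    used_strategy: bool
    survived: bool


def build_population():
    """Hypothetical cohort: 1,000 startups, 10% survival regardless of strategy."""
    population = []
    # 700 startups used the strategy; 70 of them survived.
    population += [Startup(True, True)] * 70 + [Startup(True, False)] * 630
    # 300 did not use it; 30 of them survived.
    population += [Startup(False, True)] * 30 + [Startup(False, False)] * 270
    return population


def share_using_strategy(startups):
    """Fraction of the given startups that used the strategy."""
    return sum(s.used_strategy for s in startups) / len(startups)


def survival_rate(startups, used_strategy):
    """Survival rate within the group that did (or did not) use the strategy."""
    group = [s for s in startups if s.used_strategy == used_strategy]
    return sum(s.survived for s in group) / len(group)


population = build_population()
survivors = [s for s in population if s.survived]

print("share of survivors using the strategy:", share_using_strategy(survivors))
print("survival rate with the strategy:      ", survival_rate(population, True))
print("survival rate without the strategy:   ", survival_rate(population, False))
```

Looking only at `survivors`, 70% used the strategy, which reads like evidence that it works. The full cohort shows the same 10% survival rate in both groups; the strategy is common among winners only because it is common among everyone.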

Real-World Consequences

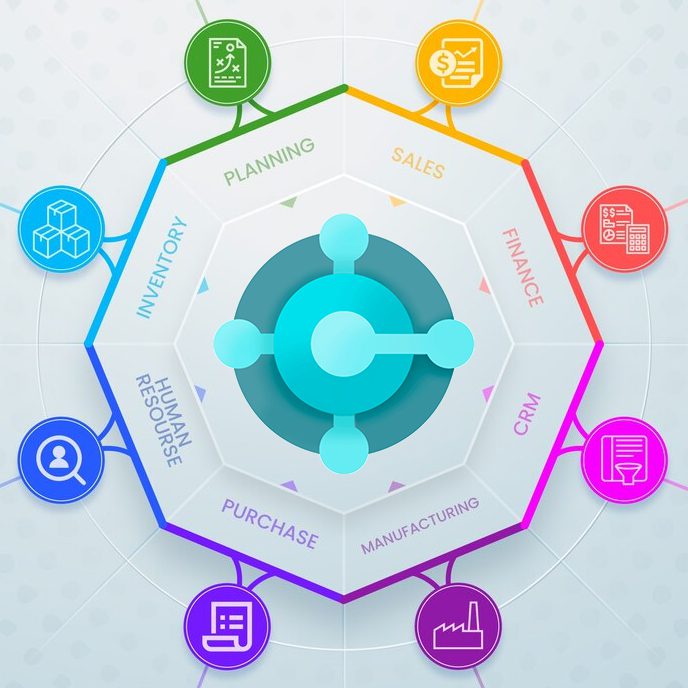

- Biased AI in Recruitment: If an AI resume screener is trained on historical data from a company that primarily hired from specific universities, the model will “learn” to penalize qualified candidates from other backgrounds, reinforcing a feedback loop of homogeneity.

- Medical Misdiagnosis: If clinical trial data predominantly features a specific ethnic group, the resulting diagnostic tools may be significantly less accurate for other populations, leading to lower quality of care.

- Financial Risk: A credit scoring model trained only on people who were already granted loans will struggle to accurately predict the risk of those previously denied, leading to missed opportunities or unforeseen losses.
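
The credit scoring case can be sketched as a simulation. Everything here is an assumption made for illustration: the normalized `income` feature, the linear default probability, and the historical approval cutoff of 0.6. In real data the outcomes of denied applicants are never observed; the simulation can show them only because it generates the whole population.

```python
import random


def simulate_applicants(n=50_000, seed=1):
    """Hypothetical applicants whose default probability falls as income rises."""
    rng = random.Random(seed)
    applicants = []
    for _ in range(n):
        income = rng.uniform(0.0, 1.0)      # normalized income (made up)
        p_default = 0.5 - 0.4 * income      # assumed true relationship
        defaulted = rng.random() < p_default
        approved = income > 0.6             # assumed historical lending policy
        applicants.append((income, approved, defaulted))
    return applicants


def default_rate(rows):
    """Fraction of the given applicants who defaulted."""
    return sum(defaulted for _, _, defaulted in rows) / len(rows)


applicants = simulate_applicants()
approved = [row for row in applicants if row[1]]
denied = [row for row in applicants if not row[1]]

print(f"default rate, approved only: {default_rate(approved):.3f}")
print(f"default rate, denied:        {default_rate(denied):.3f}")
print(f"default rate, all:           {default_rate(applicants):.3f}")
```

A model fitted only to the `approved` rows sees a default rate well below that of the full applicant pool, and it never sees an applicant with income below the cutoff, so any prediction it makes for that group is an extrapolation.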

Conclusion

Selection bias is a subtle enemy. It hides in the gaps of what we don’t see. As data science continues to automate critical decisions in health, finance, and social infrastructure, the responsibility lies with the practitioner to look beyond the available data and ask: “Who is missing from this picture?”